In the past, we’ve discussed server consolidation using virtualization technologies like RHEV. We know that moving to an enterprise virtualization solution brings tangible reductions in physical footprint: running fewer, more powerful servers with advanced memory capabilities at higher utilization is standard fare for today's data center architectures.

Most of the conversation focuses on the server layer, maybe because it's the most visible and tangible portion of the stack, or maybe because we system architects have a bias toward particular parts of it. Either way, we need to stop the server-density navel-gazing and look at a real implementation problem that our virtualization architectures must address: storage.

The Data Habit

System architects already know the value (and pain) of enterprise storage, usually centered on databases or large data sets. Single servers can't easily house enough disks for the multi-terabyte working sets, spread across many data files, that workloads like GIS applications generate. Local spindle counts and speeds can't keep a heavily used transactional database system fed, so we move to high-throughput, low-latency, off-server disk systems. Storage area network (SAN), network-attached storage (NAS) and direct-attached storage (DAS) lead the acronym race for the care and feeding of our data habit. Having pushed that work off the server, we are content to let our local 200GB, 3Gb/s SCSI, SAS and SATA drives host our operating systems and application scratch space.

When we migrate those OS and application workloads from bare metal into a virtual environment, we drag that remaining local storage need with us. But that's fine, right? The heavy-duty workloads are off on specialized storage devices, and we aren't using very much of our super-sized local platters, so what can go wrong with our virtual servers?

Breaking the Habit

As we look at our server pools, the first thing to realize is that we are used to dedicated local storage for operating system workloads. Each OS instance has a 3Gb/s disk all to itself, with no contention. With 100 systems to virtualize, that's 100 dedicated spindles with 300Gb/s of aggregate bandwidth. Using 4Gb/s Fibre Channel cards, we'd need 75 cards to provide that much throughput. In an extremely large environment, or one that uses RAID 1 as a local performance boost … well, I'll leave the math to you.
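To make that arithmetic explicit, here is a minimal Python sketch of the sizing. It assumes, as the paragraph does, that each local disk delivers its full 3Gb/s interface rate (real sustained disk throughput is usually lower), and for the RAID 1 case it simply treats each mirror member as another full-rate spindle, which is an upper bound. The function names are illustrative only and are not part of any RHEV or NetApp tool.

```python
import math


def aggregate_bandwidth_gbps(servers: int, disk_gbps: float, disks_per_server: int = 1) -> float:
    """Total dedicated local-disk bandwidth being consolidated.

    Assumes every disk runs at its full interface rate, which overstates
    real sustained throughput.
    """
    return servers * disks_per_server * disk_gbps


def fc_cards_needed(total_gbps: float, card_gbps: float) -> int:
    """Fibre Channel cards required to match that bandwidth, rounded up to whole cards."""
    return math.ceil(total_gbps / card_gbps)


if __name__ == "__main__":
    # RAID 1 is modeled as two full-rate spindles per server (an upper bound).
    for label, disks in (("single disk", 1), ("RAID 1 mirror", 2)):
        total = aggregate_bandwidth_gbps(servers=100, disk_gbps=3.0, disks_per_server=disks)
        cards = fc_cards_needed(total, card_gbps=4.0)
        print(f"{label}: {total:.0f} Gb/s -> {cards} x 4 Gb/s FC cards")
```

Running it reports 300 Gb/s and 75 cards for the single-disk case, and 600 Gb/s and 150 cards when every server's disk is mirrored.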

We don't always use those local disk resources at 100%, so we need to model our actual I/O profile. OS boot, application startup and heavy application loads are the known hard-hitting actions on our drives these days, but what about the rest? Let's say we find our servers are doing about 3-5 IOPS (I/O operations per second) for general application logging. That's noise on a local disk, but across our consolidated, virtualized 100-server workload it becomes an additional 300-500 IOPS. Failing to fully map the I/O requirements of each server moving into the virtual environment can seriously hamstring your consolidation efforts.
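A minimal sketch of that roll-up, assuming we have already measured a low and high IOPS figure for each server. The `ServerProfile` class and the server names are hypothetical; only the 3-5 IOPS logging rate comes from the text. A fuller assessment would track each activity (boot, application start, peak load, logging) separately rather than a single logging range.

```python
from dataclasses import dataclass


@dataclass
class ServerProfile:
    """Hypothetical per-server measurement of steady-state logging IOPS."""
    name: str
    logging_iops_low: float
    logging_iops_high: float


def consolidated_iops(profiles: list[ServerProfile]) -> tuple[float, float]:
    """Sum the low and high ends of every server's IOPS into one fleet-wide range."""
    low = sum(p.logging_iops_low for p in profiles)
    high = sum(p.logging_iops_high for p in profiles)
    return low, high


if __name__ == "__main__":
    # 100 identical servers, each doing 3-5 IOPS of application logging.
    fleet = [ServerProfile(f"srv{i:03d}", 3, 5) for i in range(100)]
    low, high = consolidated_iops(fleet)
    print(f"Background load on shared storage: {low:.0f}-{high:.0f} IOPS")
```

With 100 identical servers, the fleet adds 300-500 IOPS of background load to the shared storage before any boot or application-start bursts are counted.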

This means virtual environments need to be designed around three axes:

- CPU power

- Memory usage

- IOPS

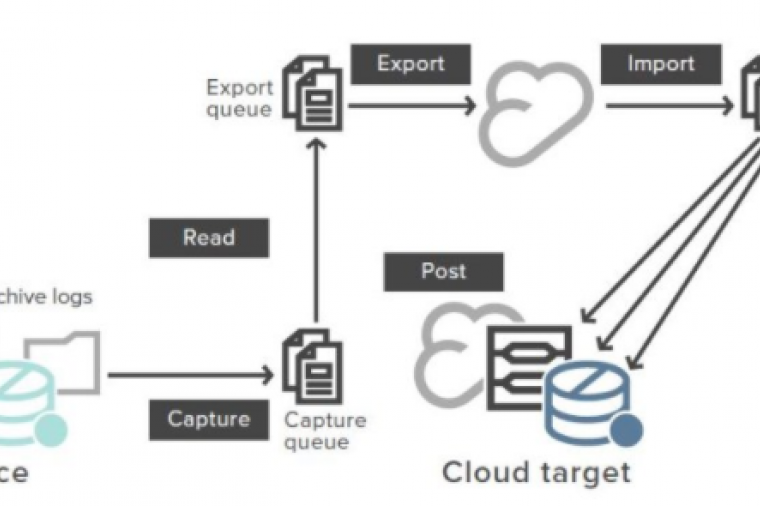
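One way to weigh the three axes together is to ask how many identical VMs fit before each resource runs out; whichever axis hits its limit first sets the consolidation ratio. The sketch below assumes a single resource pool and uniform VMs, and every capacity and demand figure in it is hypothetical, chosen only for illustration.

```python
import math


def vms_per_axis(capacity: dict[str, float], per_vm: dict[str, float]) -> dict[str, int]:
    """For each axis, the number of whole VMs that fit within the pool's capacity."""
    return {axis: math.floor(capacity[axis] / per_vm[axis]) for axis in per_vm}


def binding_axis(capacity: dict[str, float], per_vm: dict[str, float]) -> tuple[str, int]:
    """The axis that runs out first, and how many VMs fit before it does."""
    fits = vms_per_axis(capacity, per_vm)
    axis = min(fits, key=fits.get)
    return axis, fits[axis]


if __name__ == "__main__":
    # Hypothetical pool: CPU in cores, memory in GB, IOPS from the storage array.
    pool = {"cpu_cores": 64, "memory_gb": 512, "iops": 2000}
    # Hypothetical average demand of one virtualized server.
    vm = {"cpu_cores": 0.5, "memory_gb": 4, "iops": 25}
    print(vms_per_axis(pool, vm))
    axis, count = binding_axis(pool, vm)
    print(f"Limiting axis: {axis} ({count} VMs)")
```

With these made-up numbers, CPU and memory would each allow 128 VMs, but the IOPS budget stops us at 80, which is exactly the kind of limit that is easy to miss when sizing only on CPU and memory.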

The storage axis is easily neglected, yet it is just as critical to the performance and health of your virtualization solution. High-speed, low-latency, high-throughput enterprise storage devices are as critical to the mix as high-memory, fast multi-core servers. High-speed caching, high spindle counts and flexible protocol delivery are all features of enterprise storage that can make your virtualization efforts more capable and stable. We can also take advantage of advanced features of enterprise storage devices, like geo-replication and snapshots, to improve our disaster recovery scenarios. Perhaps it’s another personal bias from ‘the UNIX way’ of tool chaining, but a series of specific tools that work together always works better than a single broad-spectrum tool built around general cases and lowest common denominators. Using a software API to communicate with a hardware device allows each layer to focus on what it does best, a lesson the backup world taught us years ago.

By combining highly capable components, like RHEV and NetApp Filers, you can build a scalable solution that can grow as your demands increase.